Why Are Headers and Footers Crucial for Data Integrity?

Headers and footers are essential parts of data structuring because they give control information that is essential for guaranteeing the security, integrity, and usefulness of data. Headers and footers play an essential role as introductory and concluding elements in a variety of written and digital contexts, including protocols for networks, digital documents, and structured datasets. To help systems and users with data processing, transmission, and validation, they enclose data with relevant information, control fields, and, in certain instances, authentication markers. These components provide data traceability across systems, improve readability, and decrease the likelihood of data loss by outlining clear organizational boundaries. Safe and dependable data management is based on their essential role.

Why Are Headers and Footers Important in Digital Documentation?

Improving Readability and User Experience

Digital documents benefit from headers and footers because they provide the necessary context and structure, making the papers easier to read and use. Document titles, section headings, and author names are common header information that aids readers in understanding the document’s purpose and navigating its content efficiently. In turn, footers facilitate document browsing and consultation by providing page numbers, dates, or file references. Especially for long papers, this uniform framework provides a presentation that is easy on the eyes and the mind. In digital documents, headers and footers aid accessibility, usability, and effectiveness by directing readers and producing a professional layout.

Headers and Footers in Automated Data Processing

Headers and footers are crucial components of automated data processing because they help systems understand data structures accurately and keep processing accuracy high. The headers include important information such as the data’s origin, encryption level, and formatting, which automated processes can use to understand and handle each data segment correctly. Footers include validation codes and closing markers to guarantee accurate processing and full data integrity. Headers and footers work in tandem to make data handling effortless and to promote harmonious system interoperability with little to no human involvement. When dealing with large amounts of data, this automated framework is critical for maintaining consistency, efficiency, and correctness.

Enhancing Data Traceability Across Documents

Headers and footers enhance data traceability by including crucial information that enables easy tracking of each document version or data packet. Information about the creation and modification of a document or data file can be found in its headers, which often include metadata like version numbers, timestamps, and user IDs. When you put unique identifiers or links to similar files in the footer, cross-referencing is much easier. Because each step of a document’s lifetime must be verified, this traceability is crucial for auditing, quality assurance, and regulatory compliance. Documents become more transparent and traceable across systems when tracking information is consolidated in headers and footers.

What Role Do Headers and Footers Play in Data Organization?

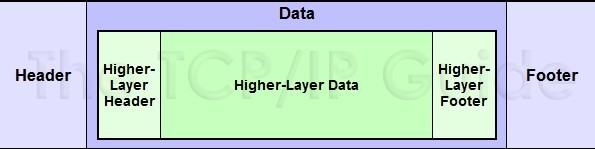

Defining Headers and Footers for Structured Data

Headers and footers are the building blocks of a dataset that provide structure and organization to the data contained within. Usually found before data, a header contains control fields and metadata that govern the processing and interpretation of the data. If included, the footer follows the body of the document and often contains any remaining verification or control fields. Despite the clear “difference between header and footer,” both work in tandem to create a cohesive structure, establishing boundaries that help both systems and users to segment, navigate, and organize data effectively across formats.

How Headers and Footers Support Data Accuracy

Data transmissions and structures benefit greatly from headers and footers because they incorporate crucial validation points and control information, greatly improving data accuracy. To ensure correct data interpretation, a header may provide important facts about the data’s protocol, format, or source. To ensure that data has not been distorted or altered during transmission, error detection fields like checksums or cyclic redundancy checks are sometimes included in footers. All of these parts work together to create a data structure that checks itself for accuracy and reliability. When dealing with critical information in contexts such as automated data processing and network communications, this verification approach is absolutely necessary.

Organizing Data for Easier Accessibility and Analysis

By offering a logical structure for dividing and defining data segments, headers and footers play a crucial role in organizing data for easy access and analysis. Headers provide immediate context that helps with interpreting and arranging the data by encapsulating metadata, which might include titles, timestamps, and unique identifiers. Footers, on the other hand, can summarize information or provide validation fields that indicate the conclusion of a data sequence. Data is more easily read and searchable with headers and footers in place, making it easier to find, retrieve, and analyze important information. This organization really shines in efficiently managing large or complicated data sets.

How Do Headers and Footers Enhance Data Security and Reliability?

Protecting Data Integrity through Standardization

The use of headers and footers helps guarantee trustworthy data processing by providing consistent structures that are applicable to all protocols and data formats. Headers often contain control information like protocol standards or data origin IDs, which systems use to identify and handle the data in a predetermined way. After that, footers ensure data integrity by indicating when a message has ended and, in many cases, by including checksums or error-detection codes. Systems are able to work together more effectively by following certain standards, which prevent data corruption and illegal changes. This uniformity ensures that data is reliable in all contexts, which increases its credibility.

The Role of Metadata in Data Authentication

Metadata, which is located in the headers and footers, is vital for data authentication and believability. In order for receiving systems to confirm the data’s legitimacy before processing, headers usually include necessary metadata such as source details, data type, and destination. As a countermeasure against data tampering during transmission, footers frequently contain security codes such as encryption keys or hash values. Headers and footers work together to verify data, protecting the sender’s and receiver’s identities. Systems are able to function with the assurance that the data is legitimate and accurate thanks to this metadata-driven authentication, which enhances data security.

How Headers and Footers Help Prevent Data Loss

Headers and footers help prevent data loss by creating distinct sections and incorporating integrity checks into the data structure. Data is steered via its storage or transmission journey by headers, which supply crucial control information like file format specifications and routing details. Verification fields, such as checksums, close the message sequence in footers, which are commonly used in networking protocols. They indicate that all data has arrived undamaged. It is very important to use headers and footers in high-volume or complicated data flows to validate the content integrity and clearly define data boundaries to avoid inadvertent data loss. Even little losses can impact the system’s correctness and reliability.

Conclusion

Data management would be incomplete without headers and footers, which improve data organization, traceability, and security across different data types. They provide a trustworthy and well-organized framework for the data content, which contributes to the accuracy and dependability of the data. These components ensure that data remains intact, allow for data verification, and facilitate the smooth transfer of information between systems in both document management and networking. The data is better protected from mistakes and illegal changes, and automated processing is more reliable, all thanks to this standardization. An essential component of any safe, structured data environment, their job is to keep data intact.